Home

OCTRON is a pipeline built on napari for segmenting and tracking animals in behavioral setups. It helps you create rich annotation data for training your own machine learning segmentation models. This enables dense quantification of animal behavior across a wide range of species and video recording conditions.

OCTRON is built on napari, Segment Anything (SAM2), YOLO, BoxMOT and 💜.

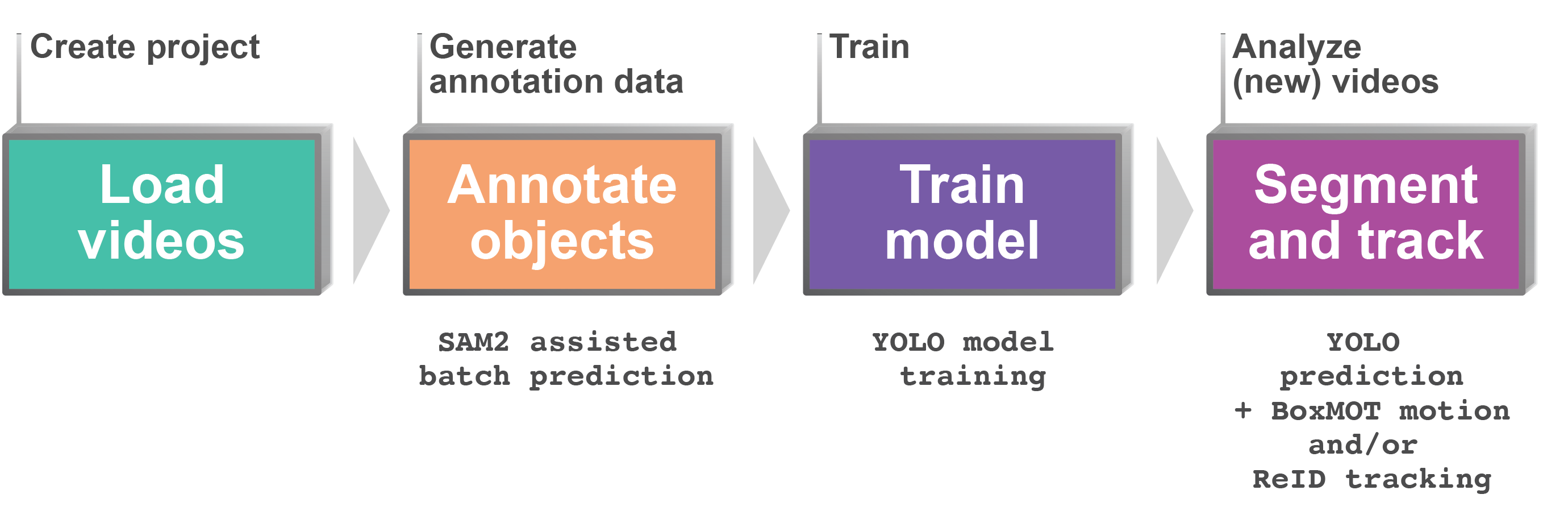

The main steps of an OCTRON workflow typically include:

- Loading video data from behavioral experiments
- Annotating frames to create training data for segmentation
- Training machine learning models for segmentation and tracking
- Applying the trained models to new data for automated tracking

How to cite

Using OCTRON for your project? Please cite this paper to spread the word!

👉Add paper details

Attributions

- Interface button and icon images were created by user Arkinasi from Noun Project (CC BY 3.0)

- Logo font: datalegreya

- OCTRON mp4 video reading is based on napari-pyav

- OCTRON training is accomplished via ultralytics:

- OCTRON annotation data is generated via Segment Anything:

@article{kirillov2023segany, title={Segment Anything}, author={Kirillov, Alexander and Mintun, Eric and Ravi, Nikhila and Mao, Hanzi and Rolland, Chloe and Gustafson, Laura and Xiao, Tete and Whitehead, Spencer and Berg, Alexander C. and Lo, Wan-Yen and Doll{\'a}r, Piotr and Girshick, Ross}, journal={arXiv:2304.02643}, year={2023} }

- OCTRON multi-object tracking is achieved via BoxMOT trackers: